ALICE: A Large Ion Collider Experiment

Hadron Physics Department - IFIN-HH

Contribution to ALICE Experiment @ LHC

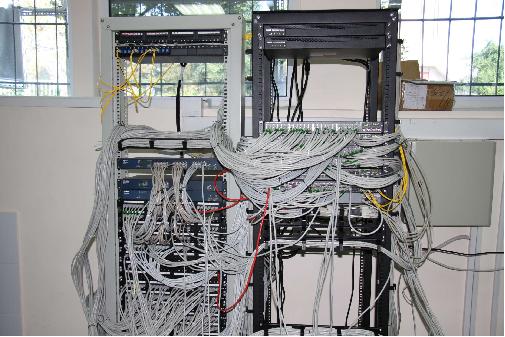

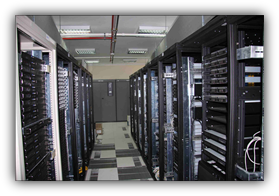

Data center

The "project" started in 2005, when appropriate funding became available.

Distributed computing - 2005

NFS regularly used between user computers

MOSIX cluster

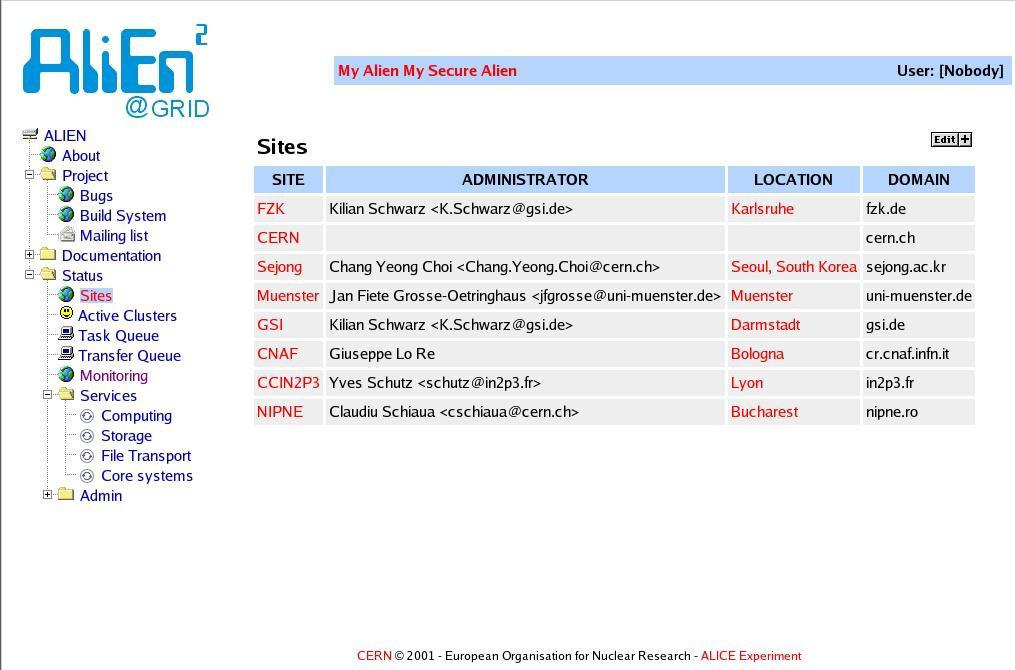

AliEn site

Distributed computing - past

HPD members regularly used clusters at international sites

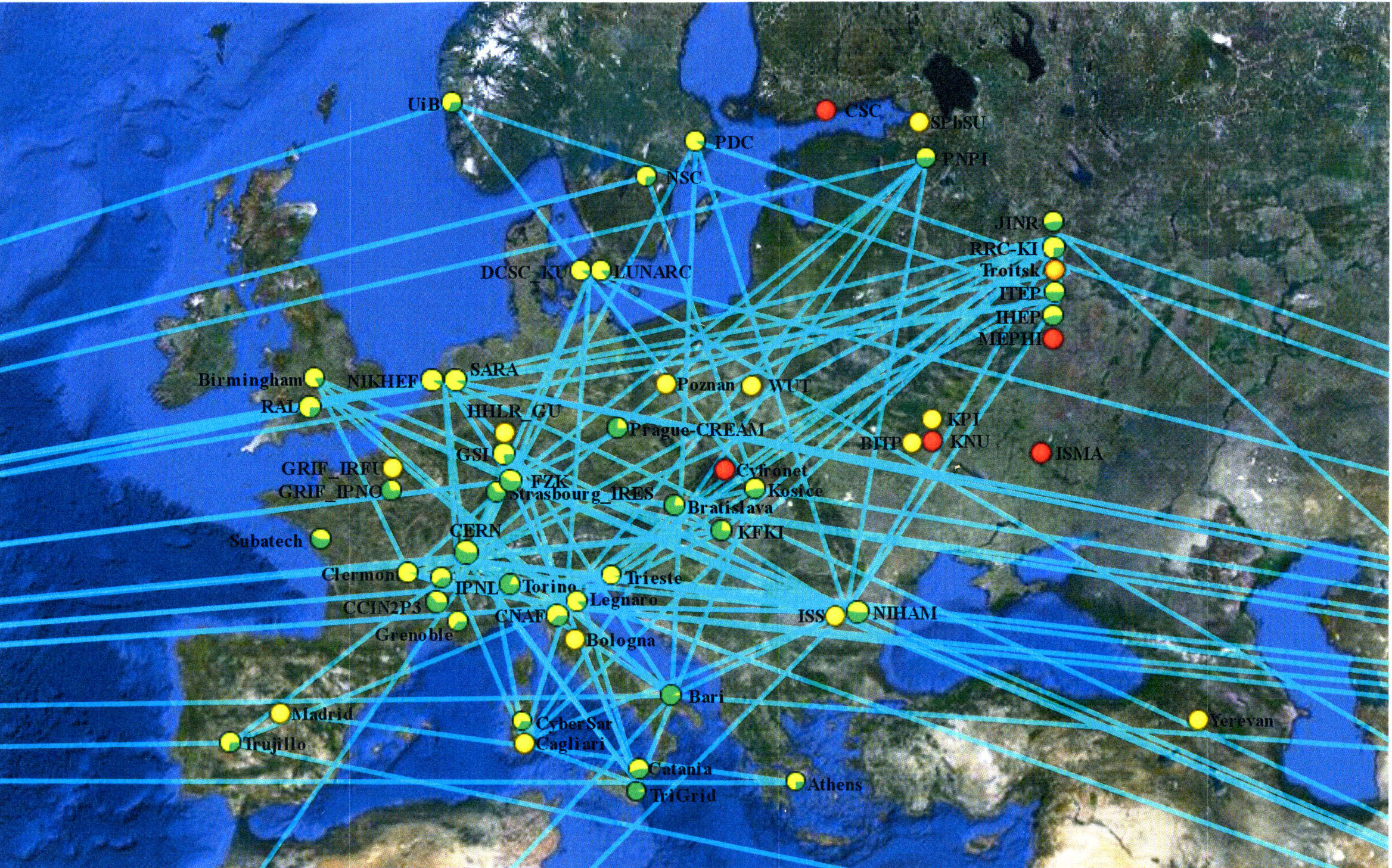

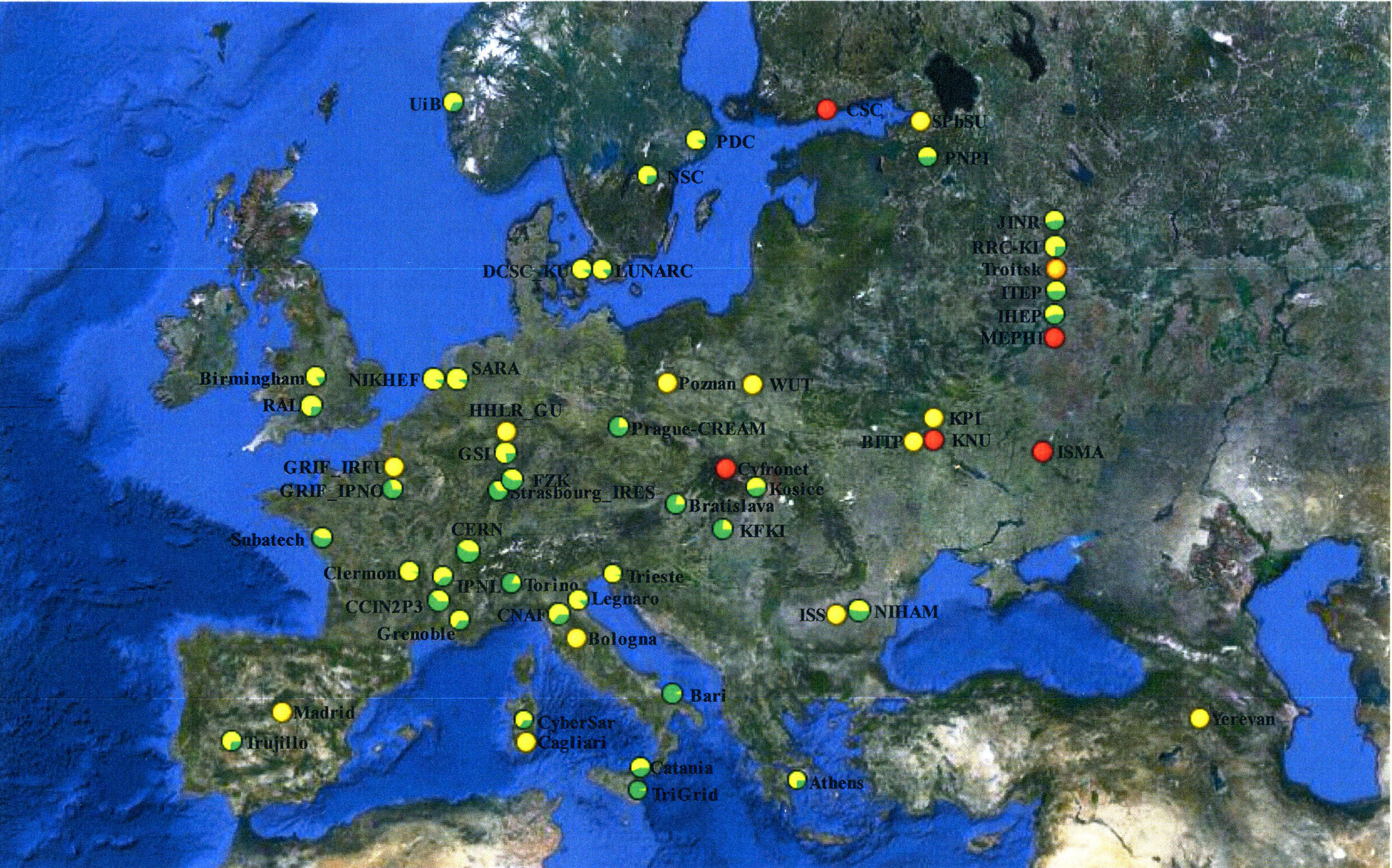

Our group is involved in Grid Computing activities.

Starting in November 2002, the Computing Cluster of our Centre of Excellence was included in the ALICE Grid structure as a Computing Element (CE) using the AliEn Grid environment, making it the first international Grid application in Romania. The very first CE contained about 10 CPUs (AMD Athlon MP and Intel Pentium processors) with a total computing power of 5588 SI2k and a total disk storage capacity of about 200 GB. The computing nodes were interconnected at 100 Mb/s, and the link to the Internet was also 100 Mb/s. At that time, this configuration was used to run ALICE test jobs and large-scale Nuclear Structure model calculations. Soon after this initial period, the computing power was upgraded by about 40 CPUs, using dual-processor machines based on Intel Xeon and AMD Opteron CPUs. The Storage Element (SE) for the ALICE Grid was increased by about 2 TB, and the link to the Internet provider was upgraded to 1 Gb/s. The final target was to have a Tier2 Centre in place by the time the ALICE experiment at CERN delivered its first experimental data.

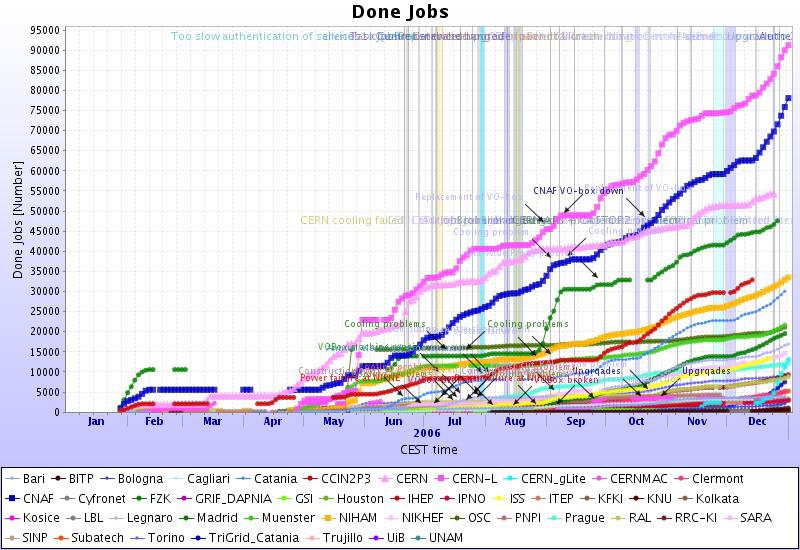

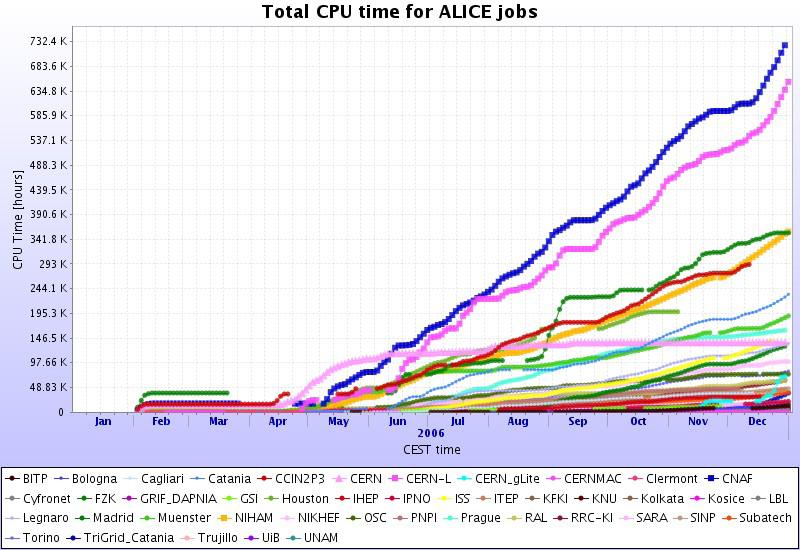

2006 takeoff

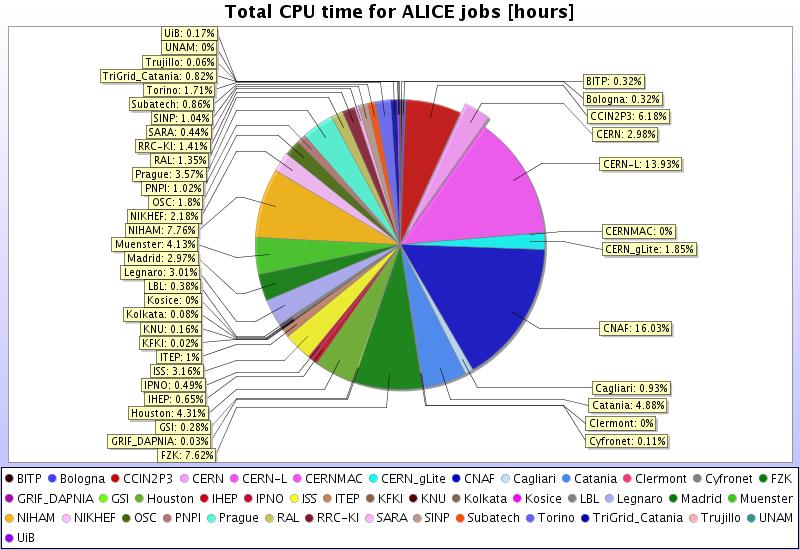

- ~200K CPU hours (~300 kSI2k hours)

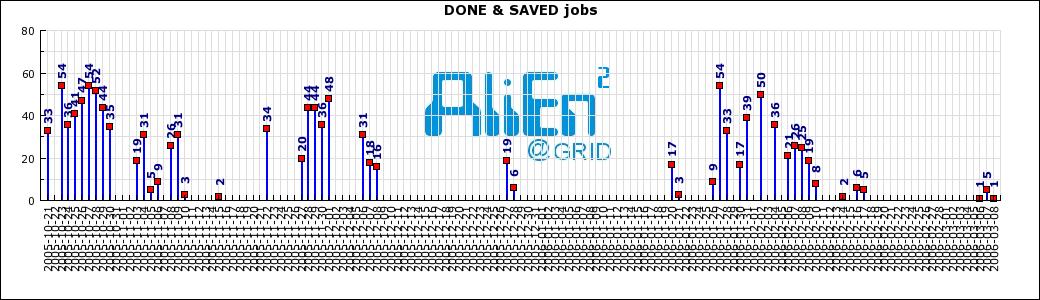

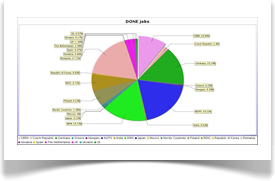

- 19K jobs DONE (6.8%)

- Last 4 months: 11 TB saved at CERN

- Average speed ~1 MB/s

- Maximum speed ~48 MB/s
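As a rough consistency check on the 2006 transfer figures, the sketch below computes the average rate implied by saving 11 TB at CERN over 4 months. It assumes decimal units (1 TB = 10^12 bytes, 1 MB = 10^6 bytes) and 30-day months; neither assumption is stated on the slide, and the function name is purely illustrative.

```python
# Back-of-envelope check of the 2006 "11 TB in 4 months, ~1 MB/s" figures.
# Assumptions (not from the slides): decimal units and 30-day months.

SECONDS_PER_DAY = 86_400


def average_rate_mb_per_s(terabytes: float, months: float, days_per_month: float = 30.0) -> float:
    """Average rate in MB/s needed to move `terabytes` of data in `months`."""
    total_bytes = terabytes * 10**12
    seconds = months * days_per_month * SECONDS_PER_DAY
    return total_bytes / seconds / 10**6


if __name__ == "__main__":
    rate = average_rate_mb_per_s(11, 4)
    print(f"11 TB in 4 months -> {rate:.2f} MB/s")
```

Run as a script, it prints `11 TB in 4 months -> 1.06 MB/s`, which agrees with the ~1 MB/s average quoted above; the ~48 MB/s maximum is a peak value and cannot be derived from these totals.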

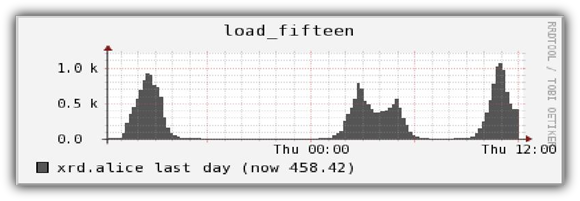

- 99.5% availability

- The first production-grade cluster: 1 front-end machine (dual Xeon 3 GHz, 2 GB RAM, 2.4 TB raw HDD) and 6 nodes (dual Xeon 3 GHz, 4 GB RAM), with 4 more added at the end of the year; all server-class, 32-bit

- 1 Gb/s network

- Fortunately, we had suitable space within the Detector Laboratory, cooled by the Lab's unit

2007 developments

- 15 new machines (dual dual-core Opteron, 2 GHz, 8 GB RAM)

- 48 TB raw SAS storage (new technology)

- 10 Gbit/s connection to DIC

- 40 new machines deployed during the year (dual Xeon 3.2 GHz, 2 MB L2, 4 GB RAM), 64-bit

- EGEE site

- Policy 1: "Regarding GRID, there is nothing more important than having a running and used site"

- Policy 2: find solutions as quickly as possible for the problems that show up during production

- Policy 3: exploit the "dedicated" character of the site to the fullest extent in order to achieve high stability and availability

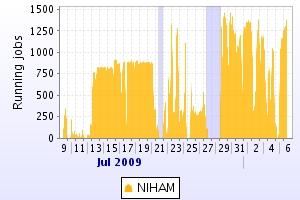

- ~1000 kHours delivered

- ~130K jobs DONE

- ~4-6% of the ALICE total, cumulated over the last 2 years

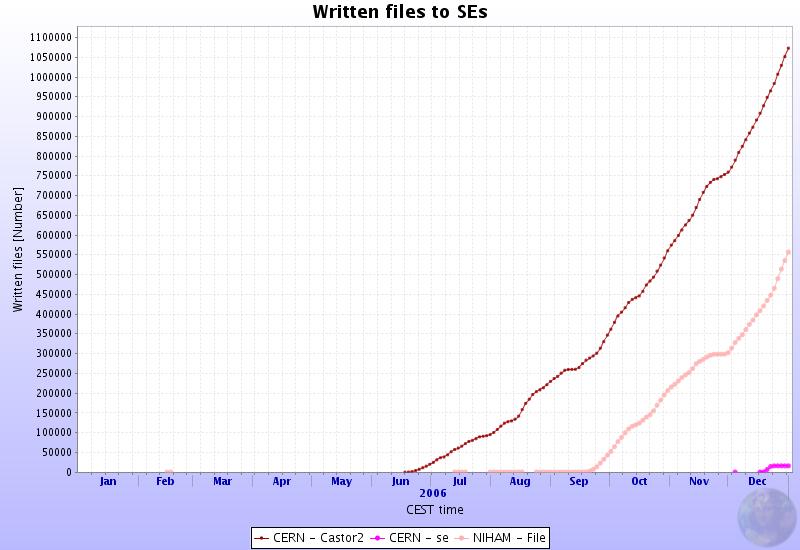

- ~3,360,000 files saved to the NIHAM SE

- Average Site Services availability since July 1, 2006: >95%

Results

~33500 jobs DONE

~360 kHours CPUTime

~7% of ALICE

Starting in September 2006, the NIHAM Storage Element was used by ALICE production jobs to store log files.

Results

Among the first AliEn2 sites

In production since September 2005

838 jobs done

The most important development: the NIHAM Data Centre, with an industrial-grade cooling unit, industrial-grade UPS units, Cat. 6 cabling, and a connection to the Institute's Diesel generator.

600 kW

- control and monitoring via Ethernet

Modern Diesel generator

2008

Computing power: 36 servers

Storage: 200 TB - HDD

The increased efficiency of the software and the performance of the new processors revealed insufficient storage capacity at the NIHAM Data Centre.

A unbalanced computing power vs. storage capacity has as

consequence:

- overload of the existing storage servers

consequence:

- overload of the existing storage servers

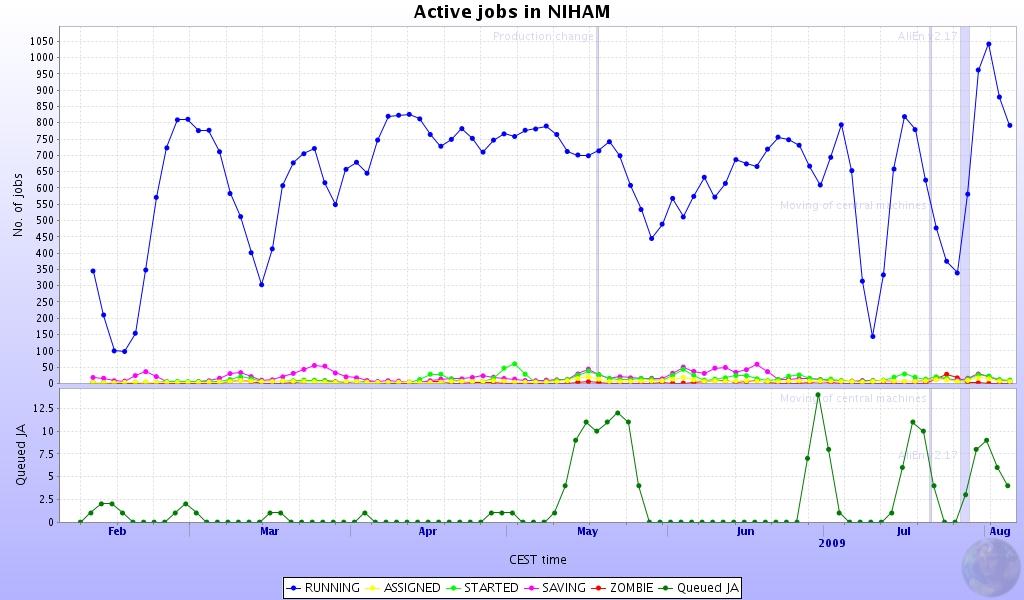

2009

| • | decrease of their performance |

| • | network overload |

| • | lower efficiency in using the existing computing power |

Back-up computing power in case of interventions

An upgrade of the NIHAM network to support present needs and developments

Extension of the storage capacity: ~500 TB

Computing servers - 10 servers

Extension of the local network infrastructure - 4 switches & accessories

2010 - 2011

~ 2000 CPU cores

~ 1 PB storage

Results

New cooling unit

- temperature

- humidity

- fresh air

Switches

NIHAM